cifar image classification

using popular deep learning architectures

Classify images from the CIFAR-10 dataset using a variety of modern architectures.

Project Overview

This project implements a training and testing pipeline for an image classification task on the CIFAR-10 dataset. CIFAR-10 contains 60,000 32x32 RGB images distributed evenly across 10 image classes (6,000 images per class). The provided dataset split consists of a train set with 50,000 images and a test set with 10,000 images. Here, the train set is further split into a train set with 45,000 images and a validation set with 5,000 images to allow for model evaluation throughout the training process. The models implemented in this repository include a basic CNN, a ResNet, and a vision transformer.
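The 45,000/5,000 train/validation split can be sketched with the standard library alone. This is a minimal illustration rather than the repository's code: the function name `split_indices` and the seed value are assumptions made for the example.

```python
import random


def split_indices(num_samples=50_000, val_size=5_000, seed=0):
    """Return disjoint (train, validation) index lists for the 50,000-image train set.

    The name, signature, and seed are hypothetical; only the 45,000/5,000
    sizes come from the project description.
    """
    indices = list(range(num_samples))
    # A dedicated, seeded generator keeps the split reproducible across runs.
    random.Random(seed).shuffle(indices)
    return indices[val_size:], indices[:val_size]


train_idx, val_idx = split_indices()
print(len(train_idx), len(val_idx))  # 45000 5000
```

The resulting index lists could then be used to select samples from the original train set, for example through a subset wrapper in the data-loading library of choice.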

Setup and Run

The repository contains both a Python script and a Jupyter notebook. The setup and run procedures for each are detailed below.

Python Script

Clone the repository.

git clone git@github.com:joe-lin-tech/cifar.git

cd cifar

Create and activate a virtual environment. (Alternatively, use an existing environment of your choosing.)

python3 -m venv venv

source venv/bin/activate

Install required pip packages and dependencies.

python3 -m pip install -r requirements.txt

Log in to a wandb account if you’d like to view training logs. (If not, make sure to set the wandb logging flag accordingly when running.)

wandb login

Your local environment should now be suitable to run the main script train.py. You can either run it interactively or use the shell to specify run options.

Run Interactively

python3 train.py

Run in the Shell

python3 train.py -m previt -d cuda

The above command fine-tunes a vision transformer pretrained on ImageNet with hyperparameters set to those used in this project. For reproducibility tests, specifying `-m` and `-d` as above is sufficient. Additional specifiers are detailed below.

python3 train.py -m resnet -e 50 -b 128 -l 0.1 -d cuda

As an example of a more customized run, the above command trains a ResNet-based model on CUDA for 50 epochs with a batch size of 128 and an initial learning rate of 0.1.

| Specifier | Usage |
|---|---|
| `-m`, `--model` | choose model architecture (cnn, resnet, previt, or vit) |
| `-e`, `--epoch` | number of epochs |
| `-b`, `--batch-size` | batch size |
| `-l`, `--learning-rate` | learning rate |
| `-d`, `--device` | device |
| `-c`, `--cross-validate` | flag for training with 5-fold cross-validation (default: False) |
| `-w`, `--wandb` | flag for wandb logging (default: False) |
| `-s`, `--save-folder` | path to desired model save folder (default: current working directory) |
| `-f`, `--ckpt-frequency` | how often to save model checkpoint, in number of epochs (default: 0, save final) |
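For readers who want to see how such specifiers are typically wired up, here is a minimal `argparse` sketch built only from the table above. It is not taken from `train.py`: the function name `build_parser` is hypothetical, and the `None` defaults for model, epochs, batch size, learning rate, and device are placeholders, since the table does not state those defaults.

```python
import argparse
import os


def build_parser():
    """Hypothetical parser mirroring the specifier table; not the actual train.py code."""
    parser = argparse.ArgumentParser(description="Train a CIFAR-10 classifier.")
    # Defaults left as None are assumptions: the table does not list them.
    parser.add_argument("-m", "--model", choices=["cnn", "resnet", "previt", "vit"])
    parser.add_argument("-e", "--epoch", type=int)
    parser.add_argument("-b", "--batch-size", type=int)
    parser.add_argument("-l", "--learning-rate", type=float)
    parser.add_argument("-d", "--device")
    # Boolean flags default to False, as documented in the table.
    parser.add_argument("-c", "--cross-validate", action="store_true")
    parser.add_argument("-w", "--wandb", action="store_true")
    parser.add_argument("-s", "--save-folder", default=os.getcwd())
    parser.add_argument("-f", "--ckpt-frequency", type=int, default=0)
    return parser


# Parse the customized ResNet run shown earlier.
args = build_parser().parse_args("-m resnet -e 50 -b 128 -l 0.1 -d cuda".split())
print(args.model, args.epoch, args.batch_size, args.learning_rate, args.device)
# resnet 50 128 0.1 cuda
```

Note that `argparse` converts dashes in long option names to underscores, so `--batch-size` is read back as `args.batch_size`.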

Jupyter Notebook

Download the Jupyter notebook and run the first cell to import relevant packages. The following Python packages are used for this project and may need to be installed directly (if not installed in current environment) with !pip install <package name>.

- General Purpose: For shuffling and seeding random processes, use `random`. To read and write to the local file system, use `os`.
- Data Manipulation: Use `numpy` to represent and manipulate data.
- Machine Learning: Use `torch` and `torchvision`, which are suitable for computer vision tasks. For logging the training loop, use `wandb`.

Run the remaining cells to execute the training procedure of the latest notebook version (pretrained vision transformer).

Model Architecture and Training

Basic CNN Architecture

This implementation consists of three convolutional blocks (conv + ReLU + max pool) followed by a fully connected network.

| Layer | Parameters |
|---|---|
| nn.Conv2d | in_channels = 3, out_channels = 8, kernel_size = 5, stride = 1, padding = 2 |
| nn.MaxPool2d | kernel_size = 2, stride = 2 |
| nn.Conv2d | in_channels = 8, out_channels = 16, kernel_size = 5, stride = 1, padding = 2 |
| nn.MaxPool2d | kernel_size = 2, stride = 2 |
| nn.Conv2d | in_channels = 16, out_channels = 32, kernel_size = 5, stride = 1, padding = 2 |
| nn.MaxPool2d | kernel_size = 2, stride = 2 |
| nn.Linear | in_features = 512, out_features = 64 |
| nn.Linear | in_features = 64, out_features = 32 |
| nn.Linear | in_features = 32, out_features = 10 |
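The layer table can be read as the following PyTorch module. This is a sketch assembled from the table, not the repository's source: the class name `BasicCNN` is hypothetical, and placing ReLU activations between the fully connected layers is an assumption, since the description only mentions ReLU in the convolutional blocks.

```python
import torch
from torch import nn


class BasicCNN(nn.Module):
    """Hypothetical reconstruction of the basic CNN from the layer table."""

    def __init__(self, num_classes=10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=5, stride=1, padding=2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),  # 32x32 -> 16x16
            nn.Conv2d(8, 16, kernel_size=5, stride=1, padding=2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),  # 16x16 -> 8x8
            nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),  # 8x8 -> 4x4
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),  # 32 channels * 4 * 4 = 512 features
            nn.Linear(512, 64),
            nn.ReLU(),  # assumption: activation between linear layers
            nn.Linear(64, 32),
            nn.ReLU(),  # assumption: activation between linear layers
            nn.Linear(32, num_classes),
        )

    def forward(self, x):
        return self.classifier(self.features(x))


model = BasicCNN()
logits = model(torch.zeros(2, 3, 32, 32))  # dummy batch of two CIFAR-sized images
print(logits.shape)  # torch.Size([2, 10])
```

The padding of 2 with a 5x5 kernel keeps the spatial size unchanged in each convolution, so only the three pooling layers shrink the input, from 32x32 down to 4x4. That is where the 512 input features of the first linear layer come from.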

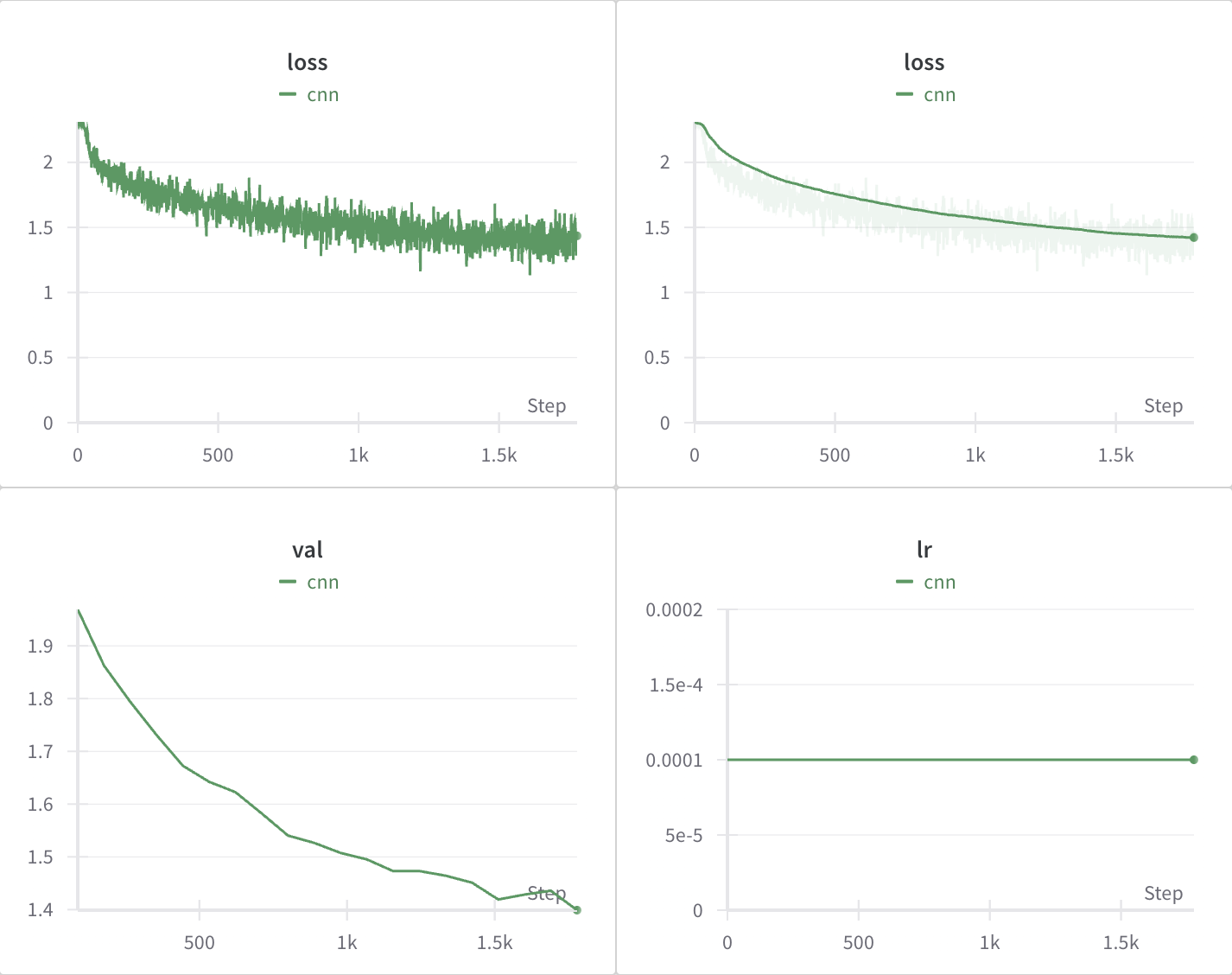

Using the hyperparameters below, the model is capable of achieving ~50% test accuracy on CIFAR-10.

| Hyperparameter | Value |
|---|---|
| EPOCHS | 20 |
| BATCH_SIZE | 128 |
| LEARNING_RATE | 1e-4 |

| Optimizer | Parameters |
|---|---|
| Adam | weight_decay = 0.01 |

Below is the wandb log of training the basic CNN model:

ResNet Architecture

This implementation uses residual connections, which maintain gradient flow and make it possible to train a deeper neural network. Implementation and technical details follow the original ResNet paper (He et al., 2015).
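A residual block of the kind introduced by He et al. (2015) can be sketched as follows. The class name `BasicBlock`, the channel sizes, and the projection shortcut are illustrative assumptions; the repository's exact block layout is not described in this README.

```python
import torch
import torch.nn.functional as F
from torch import nn


class BasicBlock(nn.Module):
    """Illustrative residual block: output = ReLU(F(x) + shortcut(x))."""

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3,
                               stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # Identity shortcut when shapes match; otherwise a 1x1 projection
        # so that the two branches can be added.
        self.shortcut = nn.Identity()
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=1,
                          stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # The skip connection gives gradients a direct path around the convolutions.
        return F.relu(out + self.shortcut(x))


same = BasicBlock(16, 16)(torch.zeros(2, 16, 32, 32))
down = BasicBlock(16, 32, stride=2)(torch.zeros(2, 16, 32, 32))
print(same.shape, down.shape)
```

The first call keeps the input shape, while the second halves the spatial resolution and doubles the channel count, which is the usual way such blocks are stacked into stages.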

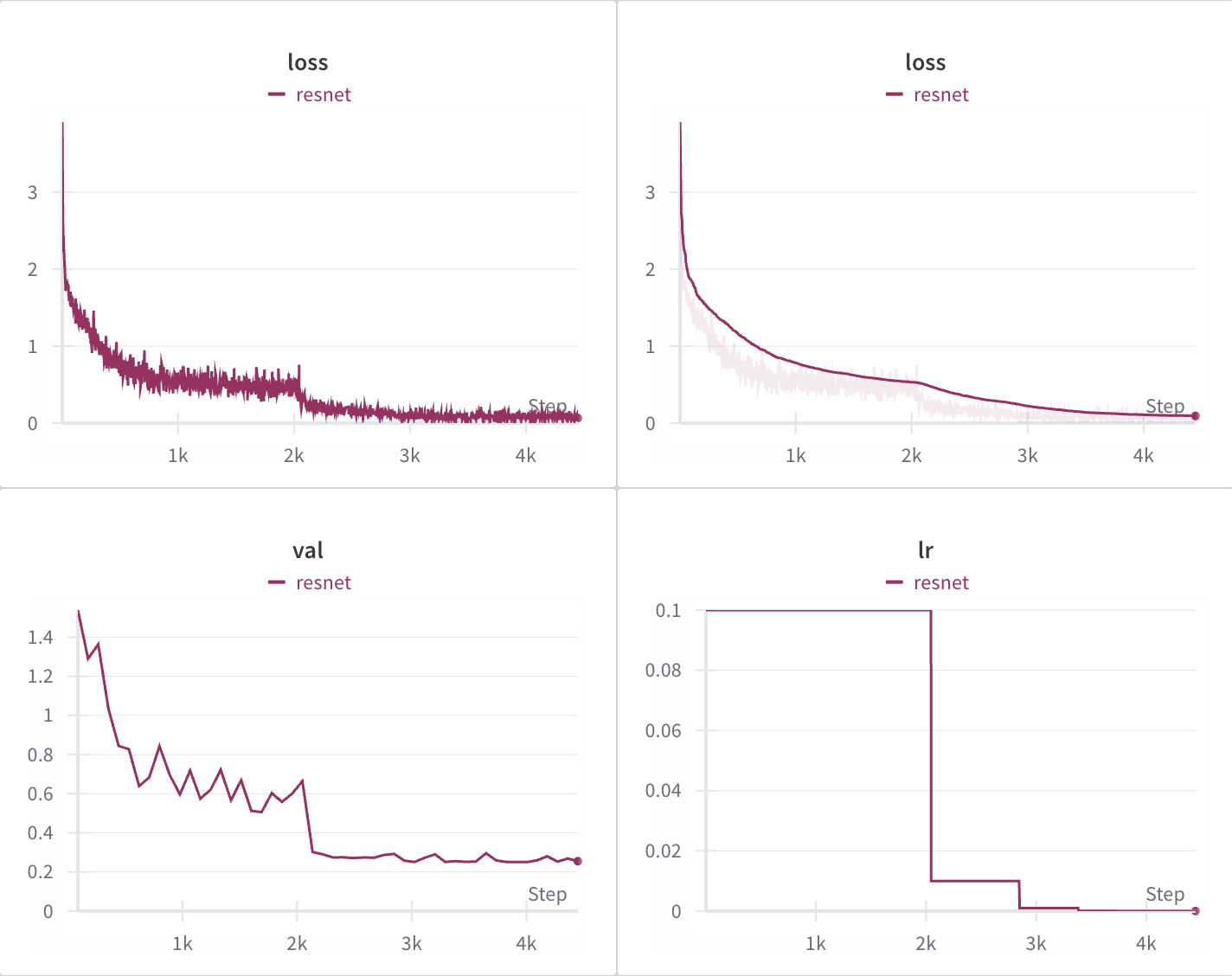

Using the hyperparameters below, the model is capable of achieving ~91% test accuracy on CIFAR-10.

| Hyperparameter | Value |
|---|---|
| EPOCHS | 50 |
| BATCH_SIZE | 128 |
| LEARNING_RATE | 0.1 |

| Optimizer | Parameters |
|---|---|
| SGD | momentum = 0.9, weight_decay = 5e-4, nesterov = True |

| Scheduler | Parameters |
|---|---|
| ReduceLROnPlateau | mode = max, factor = 0.1, patience = 3, threshold = 1e-3 |
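The optimizer and scheduler settings above map onto PyTorch roughly as shown here. The `nn.Linear` model is only a placeholder, and monitoring validation accuracy is an assumption inferred from `mode = max`; the README does not say which metric the scheduler tracks.

```python
import torch
from torch import nn

model = nn.Linear(4, 2)  # placeholder model, stands in for the ResNet

optimizer = torch.optim.SGD(
    model.parameters(), lr=0.1, momentum=0.9, weight_decay=5e-4, nesterov=True
)
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="max", factor=0.1, patience=3, threshold=1e-3
)

# Simulate a monitored metric (assumed: validation accuracy) that stops improving.
# Each iteration stands for one epoch; training itself is omitted.
for val_accuracy in [0.50, 0.50, 0.50, 0.50, 0.50]:
    scheduler.step(val_accuracy)

lr_after = optimizer.param_groups[0]["lr"]
print(lr_after)
```

With `patience = 3`, the scheduler tolerates three epochs without improvement and cuts the learning rate by `factor` on the fourth, so after this stalled sequence the rate has dropped from 0.1 to about 0.01.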

Below is the wandb log of training the ResNet model:

Vision Transformer

The final implementation harnesses the expressive capabilities of transformers, especially their use of self-attention (Dosovitskiy et al., 2021). Note that instead of patchifying the image and linearly projecting each patch, a convolutional layer is applied to obtain patch embeddings. This modification helps “increase optimization stability and also improves peak performance”, as described by Xiao et al. (2021).
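One possible form of a convolutional patch embedding, in the spirit of Xiao et al. (2021), is sketched below. Everything specific here is an assumption rather than the repository's design: the class name `ConvPatchEmbedding`, the two strided 3x3 convolutions, the channel widths, and the embedding dimension of 192.

```python
import torch
from torch import nn


class ConvPatchEmbedding(nn.Module):
    """Hypothetical convolutional stem that turns an image into a token sequence."""

    def __init__(self, in_channels=3, embed_dim=192):
        super().__init__()
        self.stem = nn.Sequential(
            nn.Conv2d(in_channels, 64, kernel_size=3, stride=2, padding=1),  # 32 -> 16
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),  # 16 -> 8
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, embed_dim, kernel_size=1),  # project to the token width
        )

    def forward(self, x):
        x = self.stem(x)  # (batch, embed_dim, 8, 8) for 32x32 inputs
        # Flatten the spatial grid into a sequence: (batch, 64, embed_dim).
        return x.flatten(2).transpose(1, 2)


tokens = ConvPatchEmbedding()(torch.zeros(2, 3, 32, 32))
print(tokens.shape)  # torch.Size([2, 64, 192])
```

For a 32x32 input this yields an 8x8 grid of 64 tokens, the same sequence length as non-overlapping 4x4 patches, but each token is computed from overlapping receptive fields.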

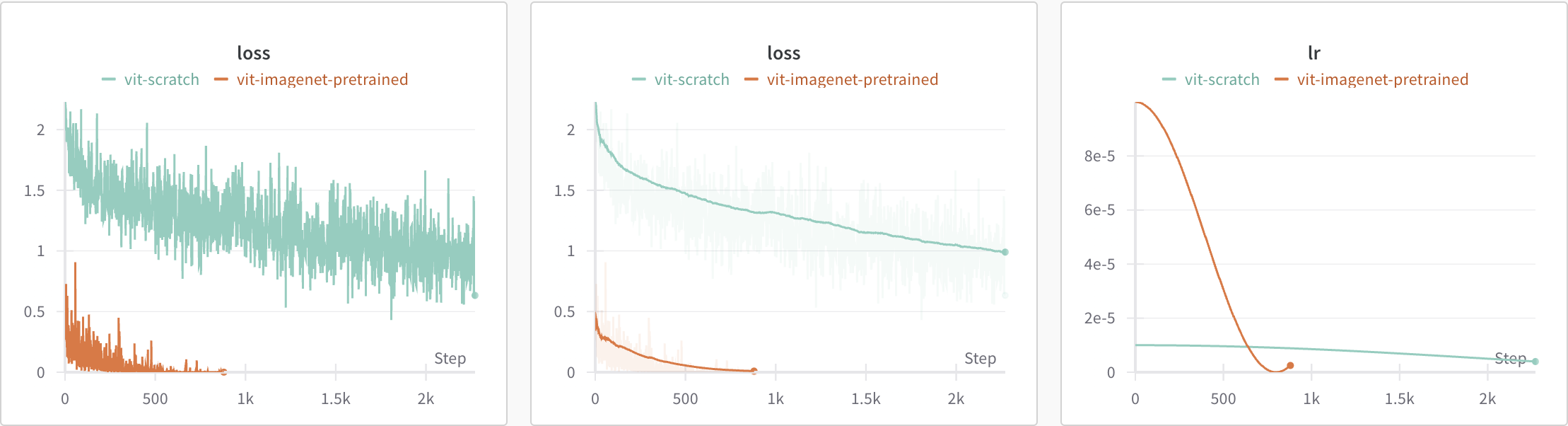

This project consists of both (1) fine-tuning a vision transformer pretrained on ImageNet and (2) training a vision transformer from scratch.

Using the hyperparameters below, the pretrained vision transformer can be fine-tuned to achieve ~97.6% test accuracy (cross-validated) on CIFAR-10.

| Hyperparameter | Value |
|---|---|
| EPOCHS | 10 |
| BATCH_SIZE | 32 |
| LEARNING_RATE | 1e-4 |

| Optimizer | Parameters |
|---|---|
| Adam | momentum = 0.9, weight_decay = 1e-7 |

| Scheduler | Parameters |
|---|---|
| CosineAnnealingLR | T_max = 10 |
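To make the scheduler setting concrete, the cosine annealing curve for these values can be computed directly from its closed form. The sketch assumes a minimum learning rate of 0, which is PyTorch's default for `CosineAnnealingLR`; the README does not state whether the project overrides it. The function name `cosine_annealing_lr` is made up for this example.

```python
import math


def cosine_annealing_lr(epoch, base_lr=1e-4, t_max=10, eta_min=0.0):
    """Closed-form cosine annealing; eta_min=0.0 is an assumed default."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max))


# Print the learning rate at the start of each of the 10 epochs, plus the endpoint.
for epoch in range(11):
    print(epoch, f"{cosine_annealing_lr(epoch):.2e}")
```

The rate starts at 1e-4, passes through half that value at epoch 5, and reaches the minimum at epoch 10, so the full decay spans exactly the 10 fine-tuning epochs.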

The same hyperparameters are used to train a vision transformer from scratch, except that the learning rate is reduced to 1e-5, a different learning rate scheduler is used, and training runs for longer (details to be added soon).

Below is the wandb log of losses and learning rate for both of these training sessions (fine-tuning and training from scratch):

References

- Dosovitskiy et al. (2021). An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale.
- Xiao et al. (2021). Early Convolutions Help Transformers See Better.
- He et al. (2015). Deep Residual Learning for Image Recognition.